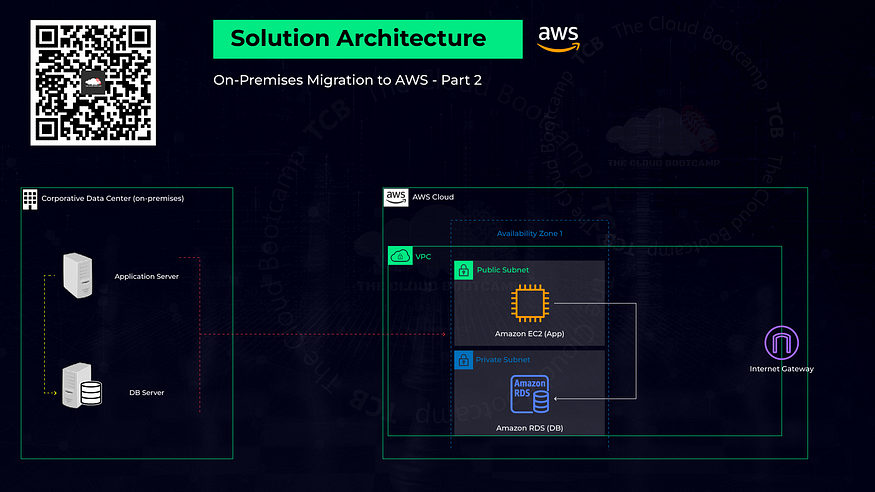

In another project based on a real-world scenario, I acted as a Cloud Specialist responsible for migrating a workload from a corporate data center to AWS. Using the Lift & Shift (rehost) model, I migrated both the application and its MySQL database to AWS, along with their data.

I followed these migration steps:

· Planning (sizing, prerequisites, resource naming)

· Execution (resource provisioning, best practices)

· Go-live (validation test/dry run, final migration/cutover)

· Post go-live (ensuring the application operates correctly and users can access it)

Planning

During the planning phase, I documented the parameters of the existing application and MySQL database. For example:

· For the VM hosting the Python application: the operating system and version (Ubuntu 20.04); the number of CPUs and the amounts of RAM and storage; and the packages and dependencies the application requires, such as Python 3, the MySQL client, and Python packages such as Flask.

· For the database: the database engine and version (MySQL 5.7.37) and its current number of CPUs and amounts of RAM and storage.
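Recording these parameters in a structured form makes it easy to compare the on-premises footprint against a candidate AWS size. The sketch below shows one way to do that. The `Footprint` class, the `sizing_gaps` function, and the on-premises numbers are hypothetical; the 1 vCPU / 1 GiB values are the published size of the t2.micro type (db.t2.micro has the same compute and memory). Storage is sized separately through the EBS volume or RDS allocated storage, so the 8 GiB value is an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Footprint:
    """Compute, memory and storage of a server or a target AWS size."""
    vcpus: int
    ram_gib: float
    storage_gib: int


def sizing_gaps(current: Footprint, target: Footprint) -> list[str]:
    """List every dimension where the target is smaller than the current server."""
    gaps = []
    for f in fields(Footprint):
        have = getattr(target, f.name)
        need = getattr(current, f.name)
        if have < need:
            gaps.append(f"{f.name}: target {have} < current {need}")
    return gaps


# Hypothetical on-premises values; substitute what the discovery step records.
app_vm = Footprint(vcpus=1, ram_gib=1.0, storage_gib=8)
# t2.micro (1 vCPU, 1 GiB) with an assumed 8 GiB root volume.
t2_micro = Footprint(vcpus=1, ram_gib=1.0, storage_gib=8)
print(sizing_gaps(app_vm, t2_micro))  # []
```

An empty list means the target is at least as large as the source on every recorded dimension; any entry points at a resource that would need a larger type.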

I then determined the AWS infrastructure the solution required, such as a VPC, public and private subnets, an Internet Gateway, and security groups, along with the configuration each one needed, such as IP address CIDR blocks and port numbers.
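CIDR planning can be checked with Python's standard `ipaddress` module before anything is provisioned. This is a minimal sketch: the `10.0.0.0/16` block, the /24 subnet size, and the subnet names are hypothetical. It encodes two AWS rules: a VPC's IPv4 CIDR block must be between /16 and /28, and AWS reserves five addresses in every subnet.

```python
from __future__ import annotations

import ipaddress

AWS_RESERVED_PER_SUBNET = 5  # AWS reserves the first four and the last address


def plan_subnets(vpc_cidr: str, names: list[str], prefix: int = 24) -> dict[str, str]:
    """Assign consecutive, non-overlapping /prefix blocks from the VPC CIDR."""
    vpc = ipaddress.ip_network(vpc_cidr)
    if not 16 <= vpc.prefixlen <= 28:
        raise ValueError("a VPC CIDR block must be between /16 and /28")
    blocks = vpc.subnets(new_prefix=prefix)
    plan = {}
    for name in names:
        block = next(blocks, None)
        if block is None:
            raise ValueError(f"{vpc_cidr} has no room left for subnet {name!r}")
        plan[name] = str(block)
    return plan


def usable_hosts(cidr: str) -> int:
    """Addresses left for instances after AWS's per-subnet reservations."""
    return ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET


# Hypothetical CIDR and subnet names.
plan = plan_subnets("10.0.0.0/16", ["public-a", "private-a", "private-b"])
print(plan)  # {'public-a': '10.0.0.0/24', 'private-a': '10.0.1.0/24', 'private-b': '10.0.2.0/24'}
print(usable_hosts(plan["public-a"]))  # 251
```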

Execution

During the Execution phase, I provisioned the AWS resources. I created a new VPC with public and private subnets, including a second private subnet in a separate Availability Zone (AZ) for a future high-availability configuration. I then created an Internet Gateway (IGW), attached it to the VPC, and added a route to the VPC route table that sends all Internet-bound traffic (0.0.0.0/0) to the IGW.
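A subnet is "public" because its route table contains a route to an IGW, and AWS picks the most specific matching route (longest prefix match). The sketch below models that lookup; the route tables and the `igw-EXAMPLE` target are placeholders, not real resource IDs, and `route_target` is a hypothetical helper rather than an AWS API.

```python
from __future__ import annotations

import ipaddress

# Hypothetical public-subnet route table. AWS adds the "local" route for the
# VPC CIDR automatically; the 0.0.0.0/0 route to the IGW is the one added here.
PUBLIC_ROUTES = {"10.0.0.0/16": "local", "0.0.0.0/0": "igw-EXAMPLE"}
# A private subnet's route table has no route to the IGW.
PRIVATE_ROUTES = {"10.0.0.0/16": "local"}


def route_target(routes: dict[str, str], destination: str) -> str | None:
    """Return the target of the most specific route matching destination."""
    dest = ipaddress.ip_address(destination)
    best_net, best_target = None, None
    for cidr, target in routes.items():
        net = ipaddress.ip_network(cidr)
        if dest in net and (best_net is None or net.prefixlen > best_net.prefixlen):
            best_net, best_target = net, target
    return best_target


print(route_target(PUBLIC_ROUTES, "10.0.1.20"))      # local
print(route_target(PUBLIC_ROUTES, "198.51.100.7"))   # igw-EXAMPLE
print(route_target(PRIVATE_ROUTES, "198.51.100.7"))  # None
```

Traffic between subnets stays on the `local` route even in the public table, because /16 is more specific than /0.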

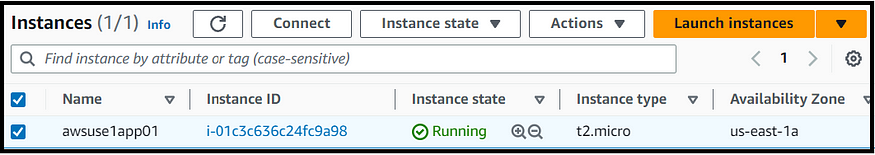

Next, I launched an EC2 instance for the application server (Ubuntu 20.04, t2.micro instance type) and created a new SSH key pair for it. In the instance's network settings, I placed it in the public subnet of the new VPC and added a security group rule allowing inbound traffic from the Internet on port 8080, the port used by the web application.
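Security group rules are allow-only: inbound traffic is permitted if any rule matches its protocol, port, and source. The sketch below models that evaluation for CIDR-based rules. The rule set is hypothetical; in particular, the SSH rule restricted to a single admin address (`203.0.113.10`, a documentation range) is an assumption, since the post does not describe the SSH rule.

```python
from __future__ import annotations

import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    protocol: str
    from_port: int
    to_port: int
    cidr: str


# Hypothetical rules for the application server's security group.
APP_SG_RULES = [
    IngressRule("tcp", 8080, 8080, "0.0.0.0/0"),    # web app, open to the Internet
    IngressRule("tcp", 22, 22, "203.0.113.10/32"),  # assumed: SSH from one admin IP
]


def is_allowed(rules: list[IngressRule], protocol: str, port: int, source_ip: str) -> bool:
    """Inbound traffic passes if any rule matches protocol, port range and source."""
    src = ipaddress.ip_address(source_ip)
    return any(
        rule.protocol == protocol
        and rule.from_port <= port <= rule.to_port
        and src in ipaddress.ip_network(rule.cidr)
        for rule in rules
    )


print(is_allowed(APP_SG_RULES, "tcp", 8080, "198.51.100.7"))  # True
print(is_allowed(APP_SG_RULES, "tcp", 22, "198.51.100.7"))    # False
```

Security groups are also stateful, so responses to allowed inbound requests need no separate outbound rule.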

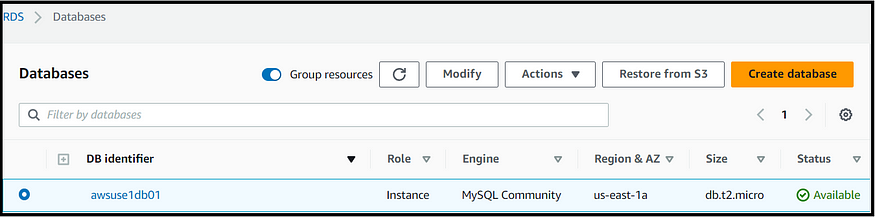

I then created an RDS instance (MySQL 5.7.37, db.t2.micro instance class) in the new VPC, along with a new security group that allows database access only from the Python application's EC2 instance, so that the database is not reachable from the Internet.
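One common way to restrict access "only from the application instance" is to use the application's security group as the rule's source instead of an IP range, so the rule keeps working if the instance's private IP changes. The sketch below models that kind of rule; the security group IDs are placeholders, and whether this project used an SG reference or an IP-based rule is an assumption. Port 3306 is MySQL's default.

```python
from __future__ import annotations

from dataclasses import dataclass

MYSQL_PORT = 3306  # MySQL's default port


@dataclass(frozen=True)
class SgSourceRule:
    """Inbound rule whose source is another security group instead of a CIDR."""
    port: int
    source_sg: str


# Placeholder IDs: the database SG admits MySQL traffic only from the app SG.
DB_SG_RULES = [SgSourceRule(MYSQL_PORT, "sg-app-EXAMPLE")]


def db_allows(rules: list[SgSourceRule], port: int, sender_sgs: set[str]) -> bool:
    """Allow if a rule matches the port and the sender belongs to its source SG."""
    return any(r.port == port and r.source_sg in sender_sgs for r in rules)


print(db_allows(DB_SG_RULES, MYSQL_PORT, {"sg-app-EXAMPLE"}))  # True
print(db_allows(DB_SG_RULES, MYSQL_PORT, {"sg-web-OTHER"}))    # False
```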

After setting up the EC2 and RDS instances, I used SSH from Git Bash to connect to the EC2 instance, authenticating with the private key I had downloaded when creating the key pair. Once connected, I updated Ubuntu, installed the packages for Python 3, the MySQL client, and related libraries, and used pip to install the required Python packages, such as Flask and WTForms.
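Before starting the application, a quick check that its Python dependencies can be imported catches a missed package early. This is a small sketch using the standard `importlib.util.find_spec`. The module list is an assumption based on the packages named above; note that it uses import names rather than pip names, and that the `mysqlclient` package is imported as `MySQLdb` (whether this application uses it is also an assumption).

```python
from __future__ import annotations

import importlib.util

# Import names (not pip names) for the packages mentioned above.
REQUIRED_MODULES = ["flask", "wtforms", "MySQLdb"]


def missing_modules(names: list[str]) -> list[str]:
    """Return the top-level modules this interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    print("missing:", ", ".join(missing) if missing else "none")
```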

Go-live

For the validation/dry-run portion of the go-live step, I performed the remaining migration steps: importing the application data and the database backup into AWS. I reconnected to the EC2 instance over SSH from Git Bash and used wget to download the application data and database backup files from the staging server. I then connected from the EC2 instance to the RDS database endpoint, created a database, and imported the data from the backup. Running SHOW TABLES and a few SELECT statements confirmed that the data had been imported successfully.
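The SHOW TABLES / SELECT spot checks can be made more systematic by comparing per-table row counts against counts taken on the source database before the backup. The function below works with any DB-API 2.0 connection (for example one opened to the RDS endpoint). In the demo, an in-memory SQLite database stands in for the restored MySQL database, and the `articles` table and its expected count are hypothetical.

```python
from __future__ import annotations

import sqlite3


def compare_row_counts(conn, expected: dict[str, int]) -> dict[str, tuple[int, int]]:
    """Return {table: (expected, actual)} for each table whose row count differs.

    Table names are interpolated into SQL, so they must come from a trusted list.
    """
    mismatches = {}
    cur = conn.cursor()
    for table, want in expected.items():
        cur.execute(f"SELECT COUNT(*) FROM {table}")
        got = cur.fetchone()[0]
        if got != want:
            mismatches[table] = (want, got)
    cur.close()
    return mismatches


# Demo: SQLite stands in for the restored MySQL database.
demo = sqlite3.connect(":memory:")
demo.execute("CREATE TABLE articles (id INTEGER PRIMARY KEY, title TEXT)")
demo.executemany("INSERT INTO articles (title) VALUES (?)", [("first",), ("second",)])
print(compare_row_counts(demo, {"articles": 2}))  # {}
print(compare_row_counts(demo, {"articles": 3}))  # {'articles': (3, 2)}
```

Row counts do not prove the contents match, but they quickly expose a truncated or partially applied import.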

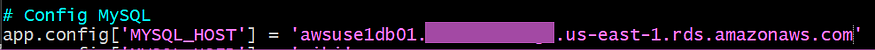

I then exited the database client to return to the SSH session on the EC2 instance and extracted the zipped application files. Using the vi editor, I edited the application's configuration file and set the 'MY_SQLHOST' entry to the hostname of the RDS endpoint.
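The manual vi edit can also be scripted, which helps when the dry run is repeated before the real cutover. This sketch assumes the configuration file uses simple `KEY = 'value'` or `KEY=value` lines; the actual format of this application's file is not shown, so treat the pattern as an assumption, and note that a trailing comment on an unquoted value would be overwritten.

```python
from __future__ import annotations

import re
from pathlib import Path


def set_config_value(text: str, key: str, value: str) -> str:
    """Replace the value on simple `KEY = 'value'` or `KEY=value` lines."""
    pattern = re.compile(
        rf"""^([ \t]*{re.escape(key)}[ \t]*=[ \t]*)(['"]?)(.*?)\2[ \t]*$""",
        re.MULTILINE,
    )
    # Keep the original prefix and quote style; only the value changes.
    new_text, count = pattern.subn(
        lambda m: f"{m.group(1)}{m.group(2)}{value}{m.group(2)}", text
    )
    if count == 0:
        raise KeyError(f"{key} not found")
    return new_text


def update_config_file(path: str, key: str, value: str) -> None:
    """Rewrite the config file in place with the new value."""
    p = Path(path)
    p.write_text(set_config_value(p.read_text(), key, value))
```

For example, `update_config_file("config.py", "MY_SQLHOST", "<RDS endpoint hostname>")`, where the file name is hypothetical and the endpoint comes from the RDS console.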

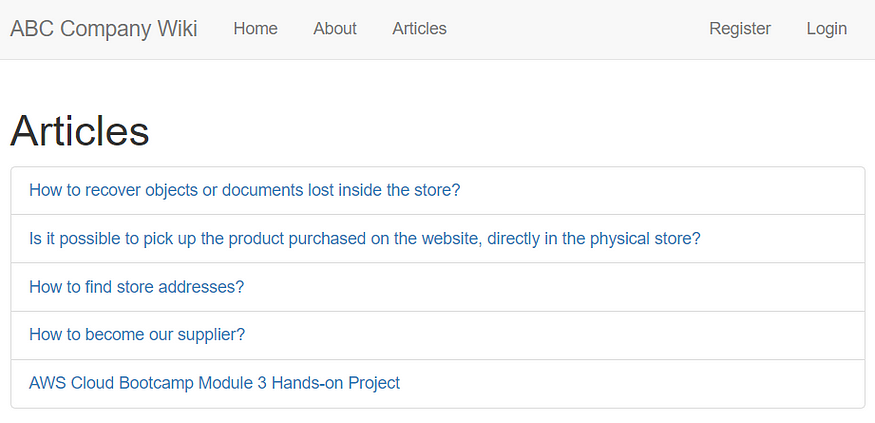

After starting the Python application on the EC2 instance, I opened a web browser, connected to the instance's public IP address on port 8080, and confirmed that the application loaded successfully.
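The browser check can be complemented with a scripted smoke test that is easy to rerun during the dry run and again at cutover. This is a minimal sketch using only the standard library; the address in the usage comment is a placeholder from the documentation range, not the real instance IP.

```python
import http.client
import urllib.request


def app_responds(url: str, timeout: float = 5.0) -> bool:
    """Return True if an HTTP GET on url succeeds with status 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException):
        # URLError/HTTPError (both OSError subclasses), refused connections,
        # timeouts and malformed responses all count as "not responding".
        return False


# Placeholder address; substitute the instance's public IP.
# app_responds("http://203.0.113.25:8080/")
```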

I performed additional tests, such as viewing the articles posted in the application, logging in with the administrator account, and creating new articles.

This project did not include an actual final migration/cutover. In a real migration, I would coordinate with the application stakeholders to schedule a downtime window during which the existing production application would be shut down and the AWS environment made available to end users.

Post Go-live

The post go-live steps would involve ensuring that staff are available to help end users connect to the new application instance and to address any issues that arise.

Summary

This project demonstrated how quickly existing applications can be migrated from on-premises environments to the cloud. With accurate data about the on-premises resources and thorough planning of the cloud architecture, applications can be migrated rapidly and efficiently and then extended with capabilities such as auto-scaling and high availability, which can significantly improve a business's ability to deliver its applications to its customers.